I have taken a growing interest in static-site generator architecture ever since I read Paul Stamatiou’s enlightening post about how he built his website. I am always intrigued to know what goes on behind the scenes of someone's website, especially a blogger's, and the technology stack they use.

Paul built his website using Jekyll. In his post, he explains his reasoning for going down this particular avenue, which, to my great surprise, resonated with me. In the past, I always felt the static-site generator architecture was too restrictive. Coming from a .NET background, I felt comfortable knowing my website was built using some form of server-side code connected to a database, allowing me infinite possibilities. Building a static site just seemed like a backwards approach to me. Paul’s opening few paragraphs changed my perception:

..having my website use a static site generator for a few reasons...I did not like dealing with a dynamic website that relied on a typical LAMP stack. Having a database meant that MySQL database backups was mission critical.. and testing them too. Losing an entire blog because of a corrupt database is no fun...

...I plan to keep my site online for decades to come. Keeping my articles in static files makes that easy. And if I ever want to move to another static site generator, porting the files over to another templating system won't be as much of a headache as dealing with a database migration.

And then it hit me. It all made perfect sense!

Enter The Static Site Generator Platform

I’ll admit, I’ve come late to the static site party and never gave it enough thought, so I decided to pick up the slack and research different static-site generator frameworks, including Jekyll, Hugo and Gatsby.

Jekyll runs on Ruby, Hugo on Go (a language developed at Google) and Gatsby on React. After some tinkering with each, I opted to invest my time in learning Gatsby. I was very tempted by Hugo (even if it meant learning Go), as it is more stable and has shorter build times, which is important to consider for larger websites, but it fundamentally lacks an extensive plugin ecosystem.

Static Generator of Choice: Gatsby

Gatsby comes across as a mature platform offering a wide variety of useful plugins and tools to enhance the application build. I’m already familiar with React from some React Native work I did in the past, though I haven’t had much chance to use it since. Because Gatsby is built on React, it gave me an opportunity to dust off the cobwebs and improve both my React and (in the process) JavaScript skills.

I was surprised by just how quickly I managed to get up and running. There is very little you have to configure, unlike when working with content-management platforms. In fact, I decided to create a Gatsby version of this very site. Within a matter of days, I was able to replicate the following website functionality:

- Listing blog posts.

- Pagination.

- Filtering by category and tag.

- SEO: managing page titles, descriptions, Open Graph tags, etc.
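To give a flavour of the pagination and tag filtering above, here is a minimal sketch in plain JavaScript. It is not the code from my site: the `posts` array, the `paginate` and `filterByTag` helpers and the URL scheme are all hypothetical, and in a real Gatsby project the post data would come from a GraphQL query rather than a hard-coded array.

```javascript
// Hypothetical post records; in a real Gatsby site these would come from a
// GraphQL query in gatsby-node.js rather than a hard-coded array.
const posts = [
  { slug: "/blog/first-post", tags: ["gatsby"] },
  { slug: "/blog/second-post", tags: ["hugo"] },
  { slug: "/blog/third-post", tags: ["gatsby", "react"] },
];

// Split a list of posts into page descriptors of `pageSize` posts each.
function paginate(items, pageSize, basePath) {
  const pageCount = Math.ceil(items.length / pageSize);
  return Array.from({ length: pageCount }, (_, i) => ({
    // The first page lives at the base path, later pages at basePath/2, /3, ...
    path: i === 0 ? basePath : `${basePath}/${i + 1}`,
    context: {
      currentPage: i + 1,
      pageCount,
      slugs: items.slice(i * pageSize, (i + 1) * pageSize).map((p) => p.slug),
    },
  }));
}

// Keep only the posts carrying a given tag.
function filterByTag(items, tag) {
  return items.filter((p) => p.tags.includes(tag));
}

// Two posts per page for the main listing and for the "gatsby" tag listing.
const blogPages = paginate(posts, 2, "/blog");
const gatsbyPages = paginate(filterByTag(posts, "gatsby"), 2, "/tag/gatsby");
```

Each descriptor has a `path` and a `context`, so it could be combined with a template `component` and handed to Gatsby's `createPage` action inside `gatsby-node.js`. The page size of two is only there to keep the example small.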

There is such a wealth of information and support online to help you along.

I am very tempted to move over to Gatsby.

When to use Static or Dynamic?

A static site generator isn’t suited to every web application scenario. It’s better suited to small and medium-sized sites where there isn't a requirement for complex integrations. It works best with content that doesn’t need to change in response to user interaction.

The main thing that comes into question is build time once you have thousands of pages of content. Take Gatsby, for example.

I read about one site containing around 6,000 posts that had a build time of 3 minutes. Build time can vary based on the environment Gatsby is running in and how well the application is built. I personally try to ensure the best possible build time by:

- Using sufficiently spec'd hardware, for both the laptop and the hosting environment.

- Keeping the application lean by using as few plugins as possible.

- Writing efficient JavaScript.

- Reusing similar GraphQL queries where the same data is being requested more than once in different components, pages and views.

We have to accept that the more pages a website has, the slower the build will be. Hugo deserves an honourable mention here, as its build speed beats the competition hands down.

Static sites have their place in any project as long as you stay within the confines of the framework. If you have a feeling that your next project will at some point (or immediately) require some form of fancy integration, dynamic is the way to go. Dynamic gives you unlimited possibilities and will always be the safer option, something static will never measure up to.

The main strengths of static sites are that they’re secure and score well in Lighthouse, which can potentially lead to more favourable search engine rankings.

Avenues for Adding Content

The very cool thing is that you can hook up your content in one of two ways:

- Markdown files

- Headless CMS

Markdown is such a pleasant and efficient way to write content. It’s all just plain text written with the help of a simplified notation that is then transformed into HTML. The crucial benefits of writing in Markdown are its portability and clean output. If in the future I choose to jump to a different static framework, it’s just a copy and paste job.
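To show why a Markdown file is so portable, here is a small sketch of how one might be read. I am assuming the common convention of a front matter block (a few `key: value` lines between `---` markers) at the top of the file. The sample post and the `parsePost` helper are made up for illustration, and a real project would lean on an established parser rather than this hand-rolled one.

```javascript
// A hypothetical post as it might be stored on disk (e.g. my-post.md).
const source = [
  "---",
  "title: Hello Static World",
  "date: 2020-01-01",
  "---",
  "",
  "# Hello",
  "",
  "Plain text with *simple* notation.",
].join("\n");

// Split a Markdown file into its front matter fields and its body.
// Only flat `key: value` lines are handled; real YAML needs a proper parser.
function parsePost(text) {
  const match = /^---\n([\s\S]*?)\n---\n?([\s\S]*)$/.exec(text);
  if (!match) {
    // No front matter block found: treat the whole file as the body.
    return { meta: {}, body: text };
  }
  const meta = {};
  for (const line of match[1].split("\n")) {
    const idx = line.indexOf(":");
    if (idx === -1) continue; // skip lines that are not key: value pairs
    meta[line.slice(0, idx).trim()] = line.slice(idx + 1).trim();
  }
  return { meta, body: match[2].trim() };
}

const post = parsePost(source);
```

Everything a generator needs, the metadata and the body text, sits in one plain-text file, which is why moving between frameworks is mostly a matter of copying files across.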

A more client-friendly solution is to integrate with a headless CMS, where more familiar rich-text editing and media storage are readily to hand.

You can also create custom-built pages, such as landing pages, without having to worry about the data layer.

Final Thoughts

I love Gatsby and it’s been a very long time since I have been excited by a different approach to developing websites. I am very tempted to make the move as this framework is made for sites like mine, provided I can find solutions to the areas in Gatsby where I currently lack knowledge, such as:

- Making URLs case-insensitive.

- 301 redirects.

- Serving different responsive images within the post content. I understand Gatsby does this at the templating level but cannot currently see a suitable approach for media housed inside content.

I’m sure the above points are achievable and, as I have made quite swift progress on replicating my site in Gatsby, if all goes to plan I could go the whole hog, meaning I wouldn’t serve content from any form of content-management system and would commit fully to Gatsby.
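For the URL casing and 301 redirect items, one possible approach is to generate a list of permanent redirects from the old mixed-case URLs to a single lower-case form. The sketch below is an assumption on my part rather than a tested solution: the `legacyPaths` list and both helper functions are hypothetical, and it only covers URLs you already know about, so it is not true case-insensitivity.

```javascript
// Hypothetical list of URLs as they exist on the current site.
const legacyPaths = ["/Blog/My-First-Post", "/blog/second-post", "/About/"];

// Canonical form assumed here: lower case, no trailing slash (except the root).
function canonicalPath(path) {
  const lower = path.toLowerCase();
  return lower.length > 1 && lower.endsWith("/") ? lower.slice(0, -1) : lower;
}

// Build one permanent-redirect descriptor for every path that differs from
// its canonical form. The object shape mirrors the arguments of Gatsby's
// createRedirect action (fromPath, toPath, isPermanent).
function buildRedirects(paths) {
  return paths
    .filter((p) => canonicalPath(p) !== p)
    .map((p) => ({ fromPath: p, toPath: canonicalPath(p), isPermanent: true }));
}

const redirects = buildRedirects(legacyPaths);
```

Each descriptor could then be passed to the `createRedirect` action in `gatsby-node.js`. As far as I understand, whether the visitor actually receives a 301 response depends on the hosting platform and any redirect plugin in use, so that part would still need checking.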

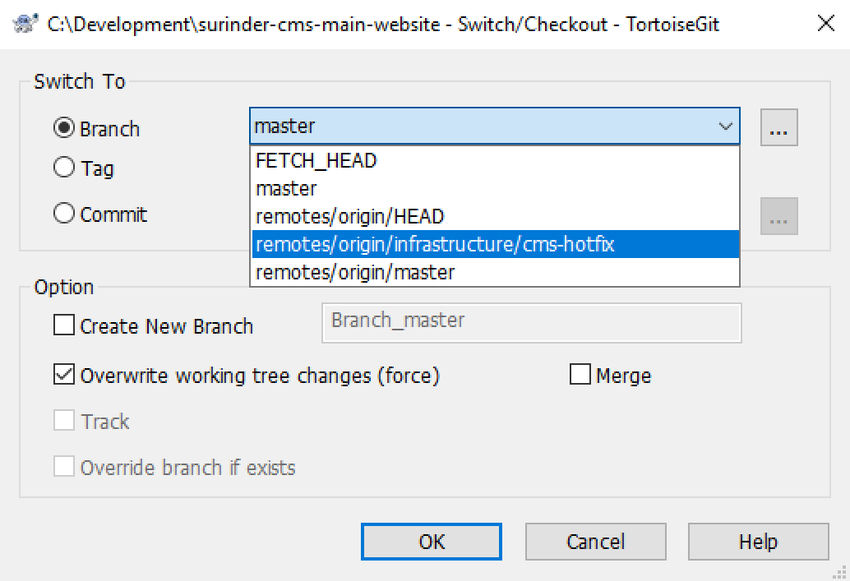

At one point I was planning on moving over to a headless CMS, such as Kontent or Prismic. That plan was swiftly scrapped when there didn’t seem to be a way of migrating my existing content without purchasing a Business or Professional plan, which came at a high cost.

I will be documenting my progress in follow-up posts, so watch this space!