It wasn’t until today that I found the Safari browser on the iPad and iPhone caches page functionality to such an extent that it stops pages working as intended, so much so that it affects the user experience. I think Apple has gone a step too far in making their browser uber efficient to minimise page loading times.

We can accept that browsers will cache style sheets and client-side scripts, but I never expected Safari to go as far as caching responses from web services. This is a big issue, because something as simple as the following will misbehave in Safari:

```javascript
// JavaScript function calling web service.
// $.ajax is asynchronous, so the result is handed to a callback
// instead of being returned (returning it would always give "").
function GetCustomerName(id, onSuccess) {
    $.ajax({
        type: "POST",
        url: "/Internal/ShopService.asmx/GetCustomerName",
        data: JSON.stringify({ id: id }),
        contentType: "application/json; charset=utf-8",
        dataType: "json",
        cache: false, // has no effect on POST requests
        success: function (result) {
            // ASP.NET web services wrap the JSON return value in a "d" property.
            onSuccess(result.d);
        }
    });
}
```

```csharp
// ASP.NET Web Service method
[WebMethod]
public string GetCustomerName(int id)
{
    return CustomerHelper.GetFullName(id);
}
```

In the past, the “cache: false” option within the AJAX call was normally enough to ensure my jQuery AJAX requests were not cached. Not if you’re making POST web service requests. Only recently did I find that the “cache: false” option has no effect on POST requests, as stated in the jQuery API documentation:

“Pages fetched with POST are never cached, so the cache and ifModified options in jQuery.ajaxSetup() have no effect on these requests.”
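For GET requests, `cache: false` works by making every URL unique: jQuery appends an `_` query-string parameter holding a timestamp-based value, and it only does this for requests without a body (GET and HEAD). The sketch below approximates that logic in plain JavaScript so it runs without jQuery. `appendCacheBuster` and its parameters are hypothetical names, and the time is passed in (normally you would use `Date.now()`) so the output is predictable.

```javascript
// Rough approximation of jQuery's `cache: false` behaviour.
// appendCacheBuster is a hypothetical helper, NOT part of the jQuery API.
function appendCacheBuster(url, method, now) {
    // jQuery only applies cache: false to requests without a body,
    // which is why the option does nothing for POST requests.
    if (!/^(GET|HEAD)$/i.test(method)) {
        return url;
    }
    var stamp = "_=" + now;
    var existing = /([?&])_=[^&]*/;
    // Replace an existing "_" parameter if the URL already has one...
    if (existing.test(url)) {
        return url.replace(existing, "$1" + stamp);
    }
    // ...otherwise append it with the correct separator.
    return url + (url.indexOf("?") === -1 ? "?" : "&") + stamp;
}

console.log(appendCacheBuster("/Internal/ShopService.asmx/GetCustomerName?id=5", "GET", 1351000000000));
// /Internal/ShopService.asmx/GetCustomerName?id=5&_=1351000000000
console.log(appendCacheBuster("/Internal/ShopService.asmx/GetCustomerName", "POST", 1351000000000));
// /Internal/ShopService.asmx/GetCustomerName
```

Because the POST URL comes back unchanged, nothing in the request varies between calls unless you change the body yourself.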

Besides trying the jQuery AJAX cache option, I also applied the practical techniques covered in the tutorial How to stop caching with jQuery and JavaScript, but neither solved the problem.

Luckily, I found an informative StackOverflow post by someone who experienced the exact same issue a few days ago. It looks like this caching bug is present in Apple’s newest operating system, iOS6*. Well, you didn’t expect Apple to fix important problems like these, now did you (I’m referring to the Maps fiasco!)? The StackOverflow poster found a suitable workaround: pass a timestamp to the web service method being called, as follows (modifying the code above):

```javascript
// JavaScript function calling web service with time stamp addition.
// The result is passed to a callback because $.ajax is asynchronous.
function GetCustomerName(id, onSuccess) {
    $.ajax({
        type: "POST",
        url: "/Internal/ShopService.asmx/GetCustomerName",
        // Timestamp parameter added so every request body is unique.
        data: JSON.stringify({ id: id, timestamp: new Date().getTime().toString() }),
        contentType: "application/json; charset=utf-8",
        dataType: "json",
        cache: false,
        success: function (result) {
            onSuccess(result.d);
        }
    });
}
```

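```csharp
// ASP.NET Web Service method with time stamp parameter
[WebMethod]
public string GetCustomerName(int id, string timestamp)
{
    // timestamp is deliberately unused: it only exists so that each
    // request body is unique and cannot be served from Safari's cache.
    return CustomerHelper.GetFullName(id);
}
```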

The timestamp parameter doesn’t need to do anything once it reaches the web service. Because the timestamp changes on every call, each request body is unique, so Safari can no longer match it to a cached response and every call reaches the server.
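To see why a timestamp is enough, here is a toy model of a cache that stores POST responses keyed on the URL plus the request body. This is an assumption, not Safari’s actual implementation; the fact that a timestamp in the body helps suggests the body forms part of the cache key. `createNaiveCache`, `buildBody` and the fake `server` function are hypothetical names used only for illustration.

```javascript
// Toy model only: a cache that stores POST responses keyed on URL + body.
// This is an assumption for illustration, NOT how Safari is implemented.
function createNaiveCache() {
    var store = {};
    return {
        // fetchFromServer stands in for the real network round trip.
        request: function (url, body, fetchFromServer) {
            var key = url + "|" + body;
            if (Object.prototype.hasOwnProperty.call(store, key)) {
                // Identical URL and body: answer from the cache, server never called.
                return { value: store[key], fromCache: true };
            }
            var value = fetchFromServer();
            store[key] = value;
            return { value: value, fromCache: false };
        }
    };
}

// Hypothetical helper mirroring the workaround: add a timestamp string so
// every body differs. The time is passed in so the example is repeatable.
function buildBody(id, now) {
    return JSON.stringify({ id: id, timestamp: String(now) });
}

// Usage: a fake server that counts how often it is actually called.
var calls = 0;
function server() {
    calls += 1;
    return "response " + calls;
}
var cache = createNaiveCache();
var url = "/Internal/ShopService.asmx/GetCustomerName";

// Same body twice: the second call is served from the cache.
console.log(cache.request(url, JSON.stringify({ id: 5 }), server).fromCache); // false
console.log(cache.request(url, JSON.stringify({ id: 5 }), server).fromCache); // true

// Timestamped bodies differ, so both calls reach the server.
console.log(cache.request(url, buildBody(5, 1000), server).fromCache); // false
console.log(cache.request(url, buildBody(5, 1001), server).fromCache); // false
console.log(calls); // 3
```

Sending the timestamp as a string matches the `string timestamp` parameter of the web method above.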

*UPDATE: After further testing, it looks like only iOS6 contains this AJAX caching bug.

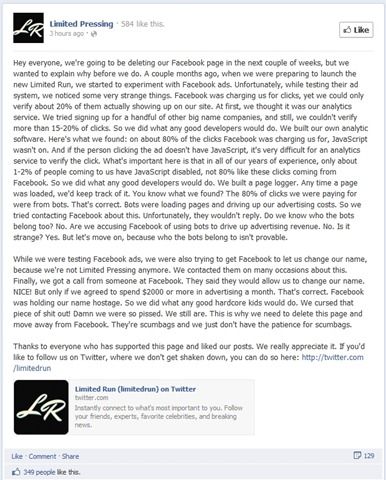

If I need to login and authenticate a Facebook user in my ASP.NET website, I either use the Facebook Connect's JavaScript library or