I’ve been forced to work from home for the last 3 months, and within the first few weeks of the coronavirus lockdown I noticed my network performance was subpar. Not ideal when a stable internet connection is one’s only gateway to the outside world and the very thing that enables you to work from home.

My current network setup is quite simple and consists of:

- ISP router - set to modem mode

- Billion 7800DXL wireless router

- Synology 4-bay NAS

- Wireless Access Point

- Wireless Security Camera

The bottleneck in the whole setup is the Billion router. It’s not a basic router by any means and it has served me well since I last upgraded my network back in 2014. After being in use 24/7 over the last 6 years, signs of wear were starting to show. My internet connection would randomly drop or grind to a halt. Carrying out a factory reset did not resolve the stability issues. The next step was to check for the latest firmware, but it seems Billion stopped releasing firmware for this model very early on - not something you’d expect for a router costing just under £200. The last firmware was released in 2015, which I had already installed.

I had to decide whether to waste more time faffing around with the Billion router or look for a replacement. I decided on the latter.

UniFi Dream Machine Router

I knew straight away what router I wanted to purchase - the UniFi Dream Machine by Ubiquiti. I was sold on the name alone!

Ubiquiti are known for making high-quality network solutions that are suitable for consumers and businesses alike. You can start off with a small setup based on your infrastructure needs, knowing that at a later point (if needed) you have the option to purchase additional hardware and scale up your network. From the reviews I’ve read online, the company makes really nice hardware, and its devices integrate seamlessly with one another as part of the Ubiquiti ecosystem.

I was so tempted to go overkill on my new network setup just so I could do some additional tinkering, but the Dream Machine Router provides all the functionality I need and more.

Form Factor

The Dream Machine isn’t like any other router I’ve purchased previously, where the form factor has been a boring horizontal slab with two or three antennae poking out - a piece of hardware I would always hide away in my cabinet. The Dream Machine, on the other hand, is a nice-looking piece of kit and even comes across as very Apple-esque. It can stand proud and upright in full view for all to see. Plus it has a really cool blue ring light.

It’s definitely heavier than any of my previous routers, which isn’t surprising given the amount of tech crammed into this oversized mint Tic Tac, containing:

- ARM Cortex Processor

- Cooling Fan

- 4 Port Gigabit Switch

- Integrated Wireless Antenna 2.4/5GHz

Setup

I love the fact that from the moment you take the router out of the box and connect it to the mains, you can get online and have everything set up in no longer than 10 minutes, all from within the UniFi Network Controller mobile app. I don’t think I’ve ever been so excited to go through setting up a network device before. The mobile app makes things so simple that even my parents wouldn’t have a problem carrying out the setup steps. I think I spent more time thinking about what I should call my wireless network. :-)

I won’t go into too much detail here on the setup steps, but they consist of the following:

- Find and connect to the device via Bluetooth.

- Create a UI.com account, or log in using your existing credentials.

- Set auto-optimise settings.

- Set up the Wi-Fi network.

- Set a firmware update schedule.

- Perform a network speed test to compare against the speeds provided by your ISP.

Once those steps are carried out, you just need to let the device go through its configuration process. Once complete, you can join the network wirelessly.

If, in the future, you decide to expand your network with additional UniFi devices, the setup process will be the same.

“Prosumer” Configuration/Monitoring

For a device that costs around £300, don’t think for one second the UniFi mobile app is the only route to making configuration changes. The mobile app is a protective bubble for the standard consumer who just wants a secure and reliable wireless setup without being too exposed to the inner workings. If I didn’t have a home NAS and didn’t feel the need to control how certain wireless access points could connect to devices, the mobile app would have more than sufficed.

Phew! The UniFi Network Controller App is telling me "Everything is great!".

I get a real kick out of seeing the vast array of network analytics and seeing how my internet usage has increased since working from home. You have at your disposal overall statistics on hardware performance, internet speed, threat maps, and device and application usage, to name a few.

If, like me, you require more control over your network, this can be done by logging into the web interface, which is just as intuitive as the mobile app. Here I was able to configure port forwarding, network groups, the firewall and a guest network. Trust me, there are a tonne more configuration options you can change really easily, making you feel like a network pro!

Security

In addition to wanting a more stable and reliable network device, security was also a big factor in my decision to purchase a Ubiquiti device.

All the routers I’ve had in the past probably only received 2-3 updates in their lifetime, and Ubiquiti has turned that on its head. Just by looking at their software release page, you can see it’s a hive of activity. Up-to-date firmware enhances the longevity of the device by fixing any possible vulnerabilities as well as ensuring the device continues to function at its optimum level. To ensure you are always running the most up-to-date firmware, Ubiquiti have made the process very easy. The device will automatically install newly released firmware based on your set schedule.

There is also an option to enable Threat Management (currently in beta) that will protect your network from attacks, malware and malicious activity. Does this feature slow down the incoming traffic? The answer is no, unless your internet connection is faster than the device’s whopping 850Mbps throughput limit. Amazing!
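To put that 850Mbps figure into perspective, here is a rough Python sketch of the arithmetic. It assumes the 850Mbps limit is the only ceiling in play and ignores Wi-Fi conditions and protocol overhead; the function names and the example line speeds are my own, purely for illustration.

```python
# Assumption: 850 Mbps is the router's throughput ceiling with
# Threat Management switched on (the figure quoted above).
THREAT_MANAGEMENT_LIMIT_MBPS = 850


def effective_speed_mbps(isp_speed_mbps, limit_mbps=THREAT_MANAGEMENT_LIMIT_MBPS):
    """Return the slower of the ISP line speed and the router's limit."""
    return min(isp_speed_mbps, limit_mbps)


def is_bottlenecked(isp_speed_mbps, limit_mbps=THREAT_MANAGEMENT_LIMIT_MBPS):
    """True only when the ISP line is faster than the router's limit."""
    return isp_speed_mbps > limit_mbps


def download_seconds(file_size_gb, speed_mbps):
    """Ideal transfer time in seconds, treating 1 GB as 8000 megabits."""
    return file_size_gb * 8000 / speed_mbps


if __name__ == "__main__":
    # Hypothetical line speeds, not measurements from my own connection.
    for isp_speed in (70, 500, 1000):
        speed = effective_speed_mbps(isp_speed)
        note = "capped by the router" if is_bottlenecked(isp_speed) else "no slowdown"
        seconds = download_seconds(5, speed)
        print(f"{isp_speed} Mbps line -> {speed} Mbps usable ({note}), 5 GB in {seconds:.0f}s")
```

In other words, unless you are paying for a near-gigabit line, the feature should not be what holds your downloads back.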

Conclusion

I purchased the Ubiquiti Dream Machine a couple of weeks into the start of the Covid-19 lockdown and I’m happy to report my network is speedier than I could’ve hoped for. This is something you immediately notice when performing large file downloads/uploads. In fact, my parents log onto my Synology remotely and even they have noted an improvement.

I’ll admit that even after plenty of research before making the purchase, I was still questioning whether spending such a large amount on a wireless router was worth it. But this concern was soon quashed, knowing I have a piece of hardware that is more future-proof than what its competitors are currently offering and can later tie into a larger network architecture when needed.

By buying this router you’ll be living the network dream!

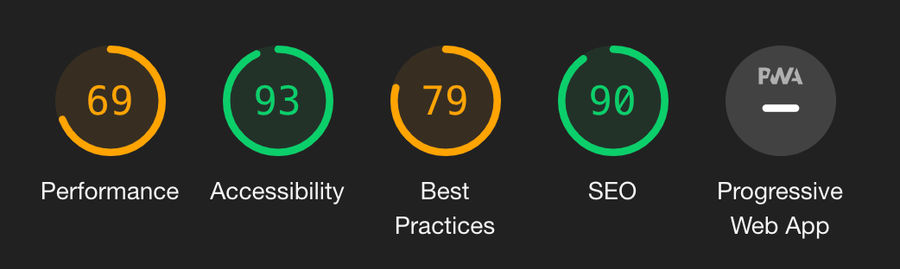
