A couple of weeks ago, one of my regularly running Azure Functions stopped for no apparent reason. One of the first things I do when this happens is run the function manually through the "Code + Test" interface, which gives me an idea of the severity of the issue and whether I can replicate the error reported in Application Insights.

It was only then that I noticed the following message, which I had never seen before, displayed above my list of functions:

> Your app is currently in read only mode because you are running from a package file. To make any changes update the content in your zip file and WEBSITE_RUN_FROM_PACKAGE app setting.

I was unable to even start the Azure Function manually. I can't be sure whether the above message was the reason for this, but it seemed too coincidental that everything came to a halt alongside a message I had never seen before. However, in one of Christos Matskas's blog posts, he writes:

> Once our Function code is deployed, we can navigate to the Azure portal and test that everything’s working as expected. In the Azure Function app, we select the function that we just deployed and choose the Code+Test tab. Our Function is read-only as the code was pre-compiled but we can test it by selecting the Test/Run menu option and hitting the Run button.

So an Azure Function in read-only mode should have no trouble running as normal.

One of the main suggestions I found online was to remove the WEBSITE_RUN_FROM_PACKAGE configuration setting or update its value to "0". This had no effect in getting the function working again.
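To make the possible states of that setting easier to reason about, here is a minimal Python sketch that classifies a WEBSITE_RUN_FROM_PACKAGE value. The helper name `describe_run_from_package` and the labels it returns are my own, for illustration only. It assumes "1" means the app runs from a deployed package file, a URL means it runs from a remotely hosted package, and a missing value or "0" means the feature is off (the "0" case follows the suggestion above rather than anything I have verified).

```python
import os
from typing import Optional
from urllib.parse import urlparse


def describe_run_from_package(value: Optional[str]) -> str:
    """Classify a WEBSITE_RUN_FROM_PACKAGE value (hypothetical helper)."""
    # Assumption: a missing, empty, or "0" value means run-from-package is off.
    if value is None or value.strip() in ("", "0"):
        return "disabled"
    value = value.strip()
    # "1" means the app runs from a package file deployed with the app.
    if value == "1":
        return "local-package"
    # Anything that parses as an http(s) URL is treated as a remote package.
    parsed = urlparse(value)
    if parsed.scheme in ("http", "https") and parsed.netloc:
        return "remote-package"
    return "unrecognised"


if __name__ == "__main__":
    # App settings are exposed to the running app as environment variables.
    print(describe_run_from_package(os.environ.get("WEBSITE_RUN_FROM_PACKAGE")))
```

In my case the app stayed broken even once the setting would have been classed as "disabled", which is part of why I suspect the setting itself was not the root cause.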

Suspected Cause

I believe there is a bigger underlying issue at play here, as an Azure Function being in read-only mode should not affect whether the function runs. I suspect it stems from publishing some updates with the latest version of Visual Studio (2022), where there might have been a conflict with publish profile settings created by an older version. All previous releases were made with Visual Studio 2019.

Solution

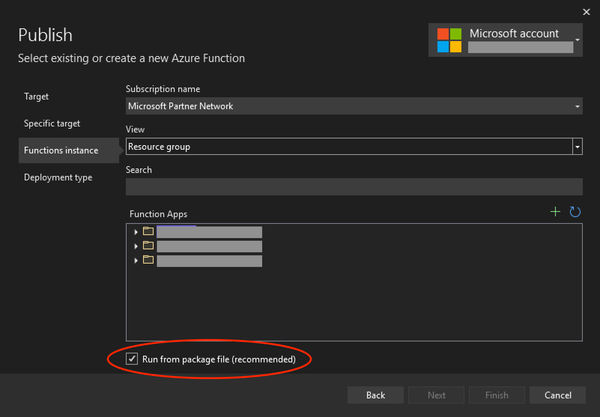

It would seem my solution differs from the recommended solutions I've seen online for similar issues. In my case, all I had to do was recreate my publish profile in Visual Studio and clear the "Run from package file (recommended)" checkbox.

After republishing with the updated publish profile, the Azure Function ran as normal.

Conclusion

This post primarily demonstrates that a new publish profile may need to be created when moving to a newer version of Visual Studio. It also shows one approach to removing the "read only" state from an Azure Function.

However, I have to highlight that it's recommended to use "run from package" as it provides several benefits, such as:

- Reduces the risk of file copy locking issues.

- Can be deployed to a production app (with restart).

- You can be certain of the files that are running in your app.

- May reduce cold-start times.

I do plan on looking into this further, as none of my Azure Functions ran any time I attempted to "run from package". My next course of action is to create a fresh Azure Function instance within the Azure Portal to see if that makes any difference.