.NET Library To Retrieve Twitpic Images

I’ve been working on a .NET library to retrieve all images from a user's Twitpic account. I thought it would be a useful library to have, since a number of people (myself included) have been asking for one on various websites and forums.

I should note that this is NOT a complete Twitpic library covering every API request listed on Twitpic’s developer site. Currently, the library only implements the core request for returning information about a specified user (users/show), which is enough to create a nice picture gallery.
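To illustrate what a single users/show call involves, here is a minimal sketch of building the request URL for a username and page number. The base address (`http://api.twitpic.com/2/`), the `.xml` format suffix and the `username`/`page` query parameters are my assumptions about Twitpic's v2 API, and `TwitpicUrls.UsersShow` is a hypothetical helper, not part of the downloadable library.

```csharp
using System;

public static class TwitpicUrls
{
    // Assumption: Twitpic API v2 base address; not taken from the library itself.
    private const string BaseUrl = "http://api.twitpic.com/2/";

    // Builds a users/show request for a username and a 1-based page number.
    // The "format" suffix (xml or json) is also an assumption.
    public static Uri UsersShow(string username, int page = 1, string format = "xml")
    {
        if (string.IsNullOrWhiteSpace(username))
            throw new ArgumentException("Username is required.", nameof(username));
        if (page < 1)
            throw new ArgumentOutOfRangeException(nameof(page), "Pages start at 1.");

        // Escape the username so characters such as spaces are URL-safe.
        string query = "username=" + Uri.EscapeDataString(username) + "&page=" + page;
        return new Uri(BaseUrl + "users/show." + format + "?" + query);
    }
}
```

The resulting `Uri` could then be fetched with `HttpClient` or `WebClient` and the response parsed into a user object.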

My Twitpic .NET library will return the following information:

- ID
- Twitter ID
- Location
- Website
- Biography
- Avatar URL
- Image Timestamp
- Photo Count
- Images
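As a rough picture of how those fields fit together, the sketch below models a user with a list of images. The class names `TwitpicUserModel` and `TwitpicImageModel` and every property name except `PhotoCount` and `Images` are hypothetical; the library's own `TwitpicUser` type may be shaped differently, and placing the timestamp on each image is my reading of "Image Timestamp".

```csharp
using System;
using System.Collections.Generic;

// Hypothetical per-image data; other image fields are omitted here.
public class TwitpicImageModel
{
    public string Id { get; set; }
    public DateTime Timestamp { get; set; }
}

// Hypothetical user data mirroring the list of returned fields.
public class TwitpicUserModel
{
    public string Id { get; set; }
    public string TwitterId { get; set; }
    public string Location { get; set; }
    public string Website { get; set; }
    public string Biography { get; set; }
    public string AvatarUrl { get; set; }

    // Total number of photos on the account, not just the current page.
    public int PhotoCount { get; set; }

    // Images for the requested page; initialised so callers never see null.
    public List<TwitpicImageModel> Images { get; set; } = new List<TwitpicImageModel>();
}
```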

Code Example:

private void PopulateGallery()

{

var hasMoreRecords = false;

//Twitpic.Get(<username>, <page-number>)

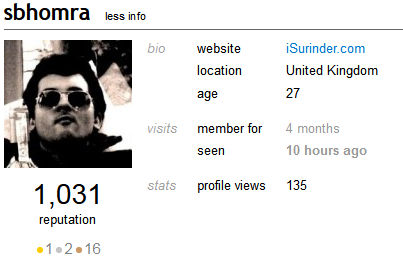

TwitpicUser tu = Twitpic.Get("sbhomra", 1);

if (tu != null)

{

if (tu.PhotoCount > 20)

hasMoreRecords = true;

if (tu.Images != null && tu.Images.Count > 0)

{

//Bind Images to Repeater

TwitPicImages.DataSource = tu.Images;

TwitPicImages.DataBind();

}

else

{

TwitPicImages.Visible = false;

}

}

else

{

TwitPicImages.Visible = false;

}

}
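The `PhotoCount > 20` check suggests that each page holds up to 20 images. Assuming that page size, a small hypothetical helper like the one below could work out how many pages to request instead of only flagging that more exist. `TwitpicPaging` is not part of the library; it only mirrors the arithmetic implied by the example.

```csharp
using System;

public static class TwitpicPaging
{
    // Assumption: 20 images per page, inferred from the PhotoCount > 20 check.
    public const int PageSize = 20;

    // Number of pages needed to hold photoCount images (rounding up).
    public static int PageCount(int photoCount)
    {
        if (photoCount < 0)
            throw new ArgumentOutOfRangeException(nameof(photoCount));
        return (photoCount + PageSize - 1) / PageSize;
    }

    // True when at least one page follows the 1-based currentPage.
    // For currentPage = 1 this matches the original "PhotoCount > 20" check.
    public static bool HasMorePages(int photoCount, int currentPage)
    {
        return currentPage < PageCount(photoCount);
    }
}
```

A gallery could then loop `for (int page = 1; page <= TwitpicPaging.PageCount(tu.PhotoCount); page++)` and call `Twitpic.Get("sbhomra", page)` for each page.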

Using the code above as a basis, I created a simple photo gallery of my own: /Photos.aspx

If you experience any errors or issues, please leave a comment.

Download: iSurinder.TwitPic.zip (5.15 kb)