Generate Code Name For Tags In Kentico

With every Kentico release that goes by, I am always hopeful that code name support will finally be added to Tags, so that each tag gets a unique text-based identifier, just like Categories (via the CategoryName field). I find code names very useful as URL wildcards for filtering a list of records, such as blog posts.

On a blog listing page, you'll normally be able to filter by either category or tag. To make things nice for SEO, we include them in our URLs, for example:

- /Blog/Category/Kentico

- /Blog/Tag/Kentico-Cloud

This is easy to carry out when dealing with categories, as every category you create has a "CategoryName" field, which strips out any special characters and is unique, so it is fit to use in slug form within a URL! We're not so lucky when it comes to Tags. In the past, to allow the user to filter my blog posts by tag, the URL was formatted to look something like this: /Blog/Tag/185-Kentico-Cloud, where the number denotes the Tag ID that my code parsed out for querying.

Not the nicest form.
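For illustration, pulling the Tag ID out of a segment like "185-Kentico-Cloud" might look something like the sketch below. The TagUrlParser class and TryGetTagId method are hypothetical names made up for this example, not the code my site actually used.

```csharp
using System.Globalization;

public static class TagUrlParser
{
    // Hypothetical helper: reads the leading Tag ID from a URL segment
    // such as "185-Kentico-Cloud". Returns false when no ID is present.
    public static bool TryGetTagId(string segment, out int tagId)
    {
        tagId = 0;

        if (string.IsNullOrWhiteSpace(segment))
            return false;

        // Everything before the first hyphen is expected to be the numeric ID.
        int dash = segment.IndexOf('-');
        string idPart = dash >= 0 ? segment.Substring(0, dash) : segment;

        // NumberStyles.None accepts digits only (no sign, no whitespace).
        return int.TryParse(idPart, NumberStyles.None, CultureInfo.InvariantCulture, out tagId)
            && tagId > 0;
    }
}
```

Every tag link then has to carry a database ID around with it, which is exactly what a code name avoids.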

The only way to get around this was to customise how Kentico stores its tags on creation and update, without impacting its out-of-the-box functionality. This could be done by creating a new table that would store newly created tags in code name form and link back to Kentico's CMS_Tag table.

Tag Code Name Table

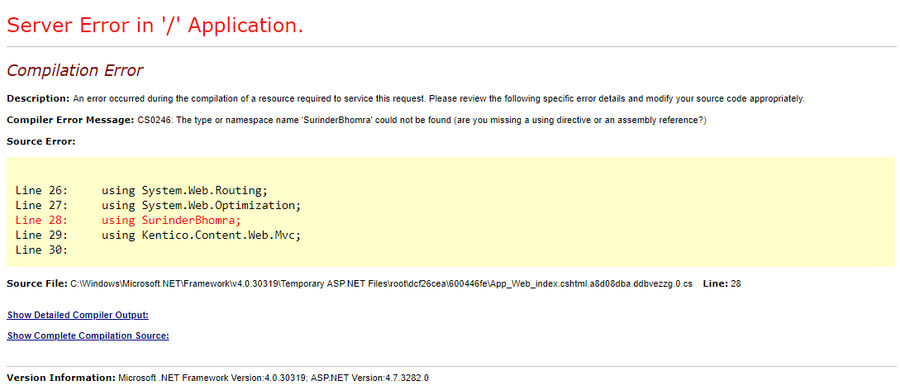

How you create your table is up to you. It could be created directly in the database, as a custom table, or as a module class. I opted to create a new class under one of my existing custom modules that groups all site-wide functionality. I called the table: SurinderBhomra_SiteTag.

The SurinderBhomra_SiteTag table consists of the following columns:

- SiteTagID (int)

- SiteTagGuid (uniqueidentifier)

- SiteTagLastModified (datetime)

- TagID (int)

- TagCodeName (nvarchar(200))

If you create your table through Kentico, the first three columns will be generated automatically. The "TagID" column is our link back to the CMS_Tag table.

Object and Document Events

Whenever a tag is inserted or updated, we want to populate our new SiteTag table with this information. This can be done through ObjectEvents.

    using System.Linq;
    using CMS;
    using CMS.DataEngine;

    // Registers the module so that OnInit() runs when the application starts
    [assembly: RegisterModule(typeof(ObjectGlobalEvents))]

    public class ObjectGlobalEvents : Module
    {
        // Module class constructor, the system registers the module under the name "ObjectGlobalEvents"
        public ObjectGlobalEvents() : base("ObjectGlobalEvents")
        {
        }

        // Contains initialization code that is executed when the application starts
        protected override void OnInit()
        {
            base.OnInit();

            // Assigns custom handlers to events
            ObjectEvents.Insert.After += ObjectEvents_Insert_After;
            ObjectEvents.Update.After += ObjectEvents_Update_After;
        }

        private void ObjectEvents_Insert_After(object sender, ObjectEventArgs e)
        {
            if (e.Object.TypeInfo.ObjectClassName.ClassNameEqualTo("cms.tag"))
            {
                SetSiteTag(e.Object.GetIntegerValue("TagID", 0), e.Object.GetStringValue("TagName", string.Empty));
            }
        }

        private void ObjectEvents_Update_After(object sender, ObjectEventArgs e)
        {
            if (e.Object.TypeInfo.ObjectClassName.ClassNameEqualTo("cms.tag"))
            {
                SetSiteTag(e.Object.GetIntegerValue("TagID", 0), e.Object.GetStringValue("TagName", string.Empty));
            }
        }

        /// <summary>
        /// Adds a new site tag, if it doesn't exist already.
        /// An existing site tag is left untouched, so its code name stays the same.
        /// </summary>
        /// <param name="tagId">ID of the tag in CMS_Tag.</param>
        /// <param name="tagName">Display name of the tag, used to build the code name.</param>
        private static void SetSiteTag(int tagId, string tagName)
        {
            SiteTagInfo siteTag = SiteTagInfoProvider.GetSiteTags()
                                    .WhereEquals("TagID", tagId)
                                    .TopN(1)
                                    .FirstOrDefault();

            if (siteTag == null)
            {
                siteTag = new SiteTagInfo
                {
                    TagID = tagId,
                    TagCodeName = tagName.ToSlug(), // The .ToSlug() is an extension method that strips out all special characters via regex.
                };

                SiteTagInfoProvider.SetSiteTagInfo(siteTag);
            }
        }
    }
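The ToSlug() extension method isn't shown in this post. As a rough idea of what such a method could look like, here is a minimal sketch. The SlugExtensions class name and the exact regex rules are assumptions for illustration, so adjust them to suit your own URL conventions.

```csharp
using System.Text.RegularExpressions;

public static class SlugExtensions
{
    // Hypothetical stand-in for the ToSlug() extension method used above.
    // Keeps the original casing so "Kentico Cloud" becomes "Kentico-Cloud".
    public static string ToSlug(this string value)
    {
        if (string.IsNullOrWhiteSpace(value))
            return string.Empty;

        // Drop everything except ASCII letters, digits, whitespace and hyphens.
        string slug = Regex.Replace(value.Trim(), @"[^A-Za-z0-9\s-]", string.Empty);

        // Collapse runs of whitespace and hyphens into a single hyphen.
        slug = Regex.Replace(slug, @"[\s-]+", "-");

        // Remove any hyphens left at either end.
        return slug.Trim('-');
    }
}
```

One thing to watch with any approach like this: two different tag names can end up with the same code name (for example, "C#" and "C" both become "C"), so you may want to check for an existing TagCodeName before saving.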

We also need to handle document deletion and carry out some cleanup, so that tags no longer assigned to any document are removed from our new table:

    using System.Collections.Generic;
    using System.Linq;
    using CMS;
    using CMS.DataEngine;
    using CMS.DocumentEngine;
    using CMS.Taxonomy;

    // Registers the module so that OnInit() runs when the application starts
    [assembly: RegisterModule(typeof(DocumentGlobalEvents))]

    public class DocumentGlobalEvents : Module
    {
        // Module class constructor, the system registers the module under the name "DocumentGlobalEvents"
        public DocumentGlobalEvents() : base("DocumentGlobalEvents")
        {
        }

        // Contains initialization code that is executed when the application starts
        protected override void OnInit()
        {
            base.OnInit();

            // Assigns custom handlers to events
            DocumentEvents.Delete.After += Document_Delete_After;
        }

        private void Document_Delete_After(object sender, DocumentEventArgs e)
        {
            TreeNode doc = e.Node;

            // DeleteSiteTags is defined on this class, so it is called directly
            DeleteSiteTags(doc);
        }

        /// <summary>
        /// Deletes Site Tags linked to CMS_Tag.
        /// </summary>
        /// <param name="tnDoc">The document that has just been deleted.</param>
        private static void DeleteSiteTags(TreeNode tnDoc)
        {
            string docTag = tnDoc.GetStringValue("DocumentTags", string.Empty);

            if (!string.IsNullOrEmpty(docTag))
            {
                foreach (string tag in docTag.Split(','))
                {
                    // Remove any whitespace left around the tag after splitting
                    string tagName = tag.Trim();

                    TagInfo cmsTag = TagInfoProvider.GetTags()
                                        .WhereEquals("TagName", tagName)
                                        .Column("TagCount")
                                        .FirstOrDefault();

                    // If the tag is no longer stored in CMS_Tag, we can delete it from the SiteTag table.
                    // This check is only true when no matching CMS_Tag row was found.
                    if (cmsTag?.TagCount == null)
                    {
                        List<SiteTagInfo> siteTags = SiteTagInfoProvider.GetSiteTags()
                                                        .WhereEquals("TagCodeName", tagName.ToSlug())
                                                        .TypedResult
                                                        .ToList();

                        if (siteTags?.Count > 0)
                        {
                            foreach (SiteTagInfo siteTag in siteTags)
                                SiteTagInfoProvider.DeleteSiteTagInfo(siteTag);
                        }
                    }
                }
            }
        }
    }

Displaying Tags In Page

To return all tags linked to a page by its "DocumentID", a few SQL joins need to be used to start our journey across the following tables:

- CMS_DocumentTag

- CMS_Tag

- SurinderBhomra_SiteTag

Nothing Kentico's Object Query API can't handle.

    /// <summary>
    /// Gets all tags for a document.
    /// </summary>
    /// <param name="documentId">ID of the document to get tags for.</param>
    /// <returns>A DataSet with TagName and TagCodeName columns, or null if the document has no tags.</returns>
    public static DataSet GetDocumentTags(int documentId)
    {
        DataSet tags = DocumentTagInfoProvider.GetDocumentTags()
                        .WhereID("DocumentID", documentId)
                        .Source(src => src.Join<TagInfo>("CMS_Tag.TagID", "CMS_DocumentTag.TagID"))
                        .Source(src => src.Join<SiteTagInfo>("SurinderBhomra_SiteTag.TagID", "CMS_DocumentTag.TagID"))
                        .Columns("TagName", "TagCodeName")
                        .Result;

        if (!DataHelper.DataSourceIsEmpty(tags))
            return tags;

        return null;
    }
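Once you have the DataSet, turning it into tag links is straightforward. The sketch below is one way you might do it, assuming the /Blog/Tag/ URL pattern from earlier in this post. The TagLinkBuilder class and BuildTagLinks method are hypothetical names for this example only.

```csharp
using System;
using System.Collections.Generic;
using System.Data;

public static class TagLinkBuilder
{
    // Hypothetical helper: turns the rows returned by GetDocumentTags()
    // into (display name, URL) pairs, e.g. "Kentico Cloud" -> "/Blog/Tag/Kentico-Cloud".
    public static List<KeyValuePair<string, string>> BuildTagLinks(DataSet tags)
    {
        var links = new List<KeyValuePair<string, string>>();

        // GetDocumentTags() returns null when the document has no tags.
        if (tags == null || tags.Tables.Count == 0)
            return links;

        foreach (DataRow row in tags.Tables[0].Rows)
        {
            string name = Convert.ToString(row["TagName"]);
            string codeName = Convert.ToString(row["TagCodeName"]);

            // Skip rows without a code name, as no clean URL can be built for them.
            if (string.IsNullOrEmpty(codeName))
                continue;

            links.Add(new KeyValuePair<string, string>(name, "/Blog/Tag/" + Uri.EscapeDataString(codeName)));
        }

        return links;
    }
}
```

The Key of each pair is the text to display and the Value is the href, so the result can be bound to a repeater or looped over in a view.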

Conclusion

We now have our tags working much like categories, where we have a display name field (CMS_Tag.TagName) and a code name (SurinderBhomra_SiteTag.TagCodeName). Going forward, any new tags that contain spaces or special characters will be sanitised and nicely presented when used in a URL. My blog demonstrates the use of this functionality.